Transforming Words into Visuals: An Introduction to Text-to-Image Models

You've probably seen some stunning images online that look almost too surreal to be made without the help of technology. Chances are, many of those images were generated using artificial intelligence. In recent years, the field of AI has made significant advancements in image generation, pushing the boundaries of what is possible.

In this blog post, we will explore the history, development, and current state of text-to-image models.

History

The journey of AI-powered image generation began with the development of image-to-text models. Researchers realized that they could train deep learning models to understand the contents of an image and generate textual descriptions of what they "saw." This breakthrough opened up exciting possibilities for applications such as generating captions for images, aiding visually impaired individuals, and assisting in image search algorithms.

Building upon the success of image-to-text models, researchers then explored the inverse task: generating images from textual descriptions. This led to the birth of text-to-image ML models, which marked a significant leap forward in the field of AI-generated images.

How they work

Text-to-image diffusion models are machine learning models that generate realistic images from textual descriptions, called prompts. They use a technique called diffusion, which iteratively refines a generated image over a series of steps. Without going into technical details, here is a simplified explanation of how they work:

Once the model receives a textual description of the image, it converts the text into a numerical representation that it can work with. This encoding captures the semantic meaning of the text.

The model then starts with an image of pure random noise and applies a series of diffusion steps. During each step, a neural network estimates which part of the current image is noise and removes some of it, guided by the encoded text, so the image gradually becomes more realistic and closer to the description.

The diffusion process involves multiple iterations, where the model refines the image at each step. Each step enhances the image's quality by adjusting its details, colors, shapes, and textures, based on the encoded text. The number of diffusion steps can vary depending on the model architecture and settings.

After the diffusion steps, the model produces a final image that matches the given textual description as closely as possible.
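The loop described above can be caricatured in a few lines of Python. This is only a toy illustration of iterative refinement, not a real diffusion model: the function names `encode_prompt` and `toy_denoise` are made up for this post, the "text encoder" is just a hash, and the target the image moves toward is known in advance, whereas a real model has to predict what to remove with a trained neural network.

```python
import hashlib
import random


def encode_prompt(prompt, dim=8):
    """Toy stand-in for a text encoder: map a prompt to `dim` numbers in [0, 1].

    Real models use a learned encoder; hashing is only an illustrative assumption.
    """
    digest = hashlib.sha256(prompt.encode("utf-8")).digest()
    return [digest[i] / 255.0 for i in range(dim)]


def toy_denoise(prompt, steps=20, seed=0):
    """Start from random noise and move closer to a prompt-dependent target each step."""
    rng = random.Random(seed)
    target = encode_prompt(prompt)          # pretend "ideal image" for this prompt
    image = [rng.random() for _ in target]  # the random-noise starting "image"
    history = []
    for step in range(steps):
        # Remove a growing share of the remaining gap; the last step removes all of it.
        fraction = 1.0 / (steps - step)
        image = [x + fraction * (t - x) for x, t in zip(image, target)]
        history.append(sum(abs(t - x) for x, t in zip(image, target)))
    return image, history


image, history = toy_denoise("a flamingo beside a pool")
print(f"gap after first step: {history[0]:.3f}, after last step: {history[-1]:.3f}")
```

The point to take away is the shape of the process: the same prompt always produces the same encoding, the starting point is noise, and each pass shrinks the gap between the current image and what the prompt asks for.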

Various Models

Probably the first well-known model available to the general public was DALL-E by OpenAI. The original DALL-E is a variation of the GPT (Generative Pre-trained Transformer) architecture designed specifically to generate images from textual descriptions; its successor, DALL-E 2, switched to a diffusion-based approach.

Another popular alternative is Midjourney. While DALL-E is known for its artistic creations, Midjourney is especially well regarded for fantasy styles.

Although there are other models like Google Imagen, they have not been made available for public use yet.

However, Stability AI has taken a different approach: it not only gives the public access to its models but has also open-sourced the code of its model, Stable Diffusion. Because it is an open-source project, researchers and developers can explore and experiment with Stable Diffusion, modifying and improving it to suit their specific requirements. This has also sparked the development of other fascinating projects built on top of Stable Diffusion.

Features exploration

Image generation models can accomplish various tasks beyond generating images from text descriptions. Here are some of them:

Outpainting

Outpainting is a feature that helps users extend their creativity by continuing an image beyond its original borders. It adds visual elements in the same style or takes a story in new directions, simply by using a natural language description.
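One common way to frame outpainting is as "filling in" a larger canvas: the original picture is placed in the middle, and a mask marks the new border area the model should invent. The sketch below shows only that preparation step on a tiny grid of numbers; `prepare_outpaint` is a hypothetical helper written for this post, and the generation itself is left out.

```python
def prepare_outpaint(image, pad, fill=0):
    """Centre `image` (a list of pixel rows) on a canvas that is `pad` pixels larger on every side.

    Returns (canvas, mask), where mask is 1 for pixels still to be generated
    and 0 for pixels copied from the original image.
    """
    height, width = len(image), len(image[0])
    new_height, new_width = height + 2 * pad, width + 2 * pad
    canvas = [[fill] * new_width for _ in range(new_height)]
    mask = [[1] * new_width for _ in range(new_height)]
    for y in range(height):
        for x in range(width):
            canvas[y + pad][x + pad] = image[y][x]
            mask[y + pad][x + pad] = 0  # original pixel: keep as-is
    return canvas, mask


canvas, mask = prepare_outpaint([[5, 6], [7, 8]], pad=1)
for row in mask:
    print(row)
```

A model would then be asked to generate content only where the mask is 1, using the untouched centre as context so the new area matches its style.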

Inpainting

With inpainting, we can make realistic edits to existing images, guided by a natural language caption. It can add and remove elements while taking shadows, reflections, and textures into account.

Prompt: Add a flamingo beside the pool.
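Behind an edit like this there is usually a mask that says which pixels may change. A minimal sketch of the final blending step, assuming the model has already produced a `generated` image: pixels under the mask come from the generated image, everything else stays as it was. The function name `composite` and the tiny one-row "images" are made up for illustration.

```python
def composite(original, generated, mask):
    """Blend two images pixel by pixel.

    mask = 1 takes the generated pixel, mask = 0 keeps the original,
    and values in between feather the edge of the edited region.
    """
    return [
        [m * g + (1 - m) * o for o, g, m in zip(orig_row, gen_row, mask_row)]
        for orig_row, gen_row, mask_row in zip(original, generated, mask)
    ]


original = [[10, 10, 10]]
generated = [[50, 50, 50]]
mask = [[0, 0.5, 1]]
print(composite(original, generated, mask))  # [[10, 30.0, 50]]
```

Soft mask values between 0 and 1 are what help an edit blend into its surroundings instead of showing a hard seam.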

Image to Image

ML models can also generate a new image based on an input (reference) image where the output image shares some desired characteristics with the input image. This can include tasks such as image colorization, style transfer, image inpainting, and image super-resolution.
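With diffusion models, a typical way to do this is to start from a partly noised copy of the reference image instead of pure noise. Many tools expose this as a "strength" setting: low values stay close to the reference, high values allow bigger changes. The sketch below is an assumption-laden toy, with a hypothetical `img2img_start` helper and a simple linear blend standing in for the real noising formula.

```python
import random


def img2img_start(reference, strength, total_steps=50, seed=0):
    """Return a noised copy of `reference` and the number of refinement steps to run.

    `strength` is between 0 (keep the reference untouched) and 1 (start from pure noise).
    The linear blend is a simplification chosen for illustration.
    """
    if not 0.0 <= strength <= 1.0:
        raise ValueError("strength must be between 0 and 1")
    rng = random.Random(seed)
    noisy = [(1 - strength) * pixel + strength * rng.random() for pixel in reference]
    # More added noise means more refinement steps are needed to clean it up again.
    steps_to_run = min(int(total_steps * strength), total_steps)
    return noisy, steps_to_run


noisy, steps = img2img_start([0.2, 0.8, 0.5], strength=0.5)
print(noisy, steps)
```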

ControlNet

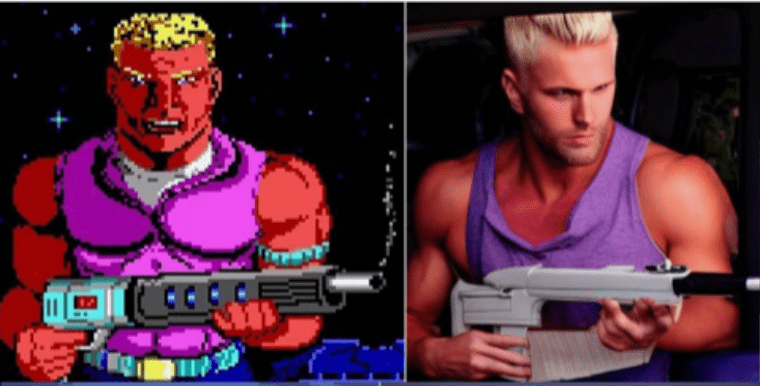

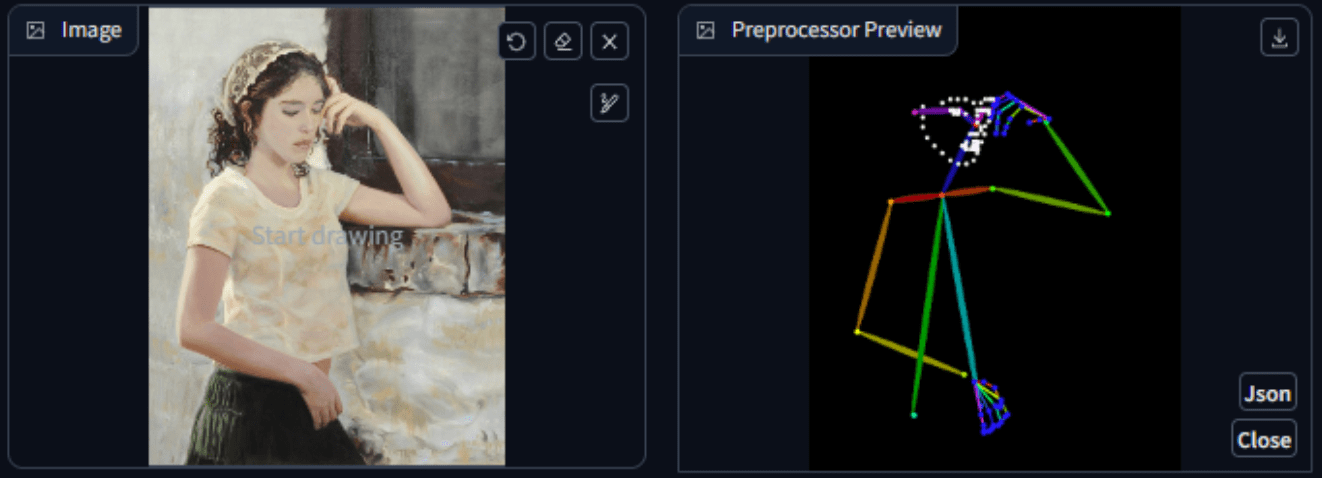

ControlNet also creates an image based on another one, but it offers more control over the result. It can extract a map from an existing image, such as an edge outline, a depth map, or a pose skeleton, and use that map to control the composition and human poses of your AI-generated image.
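To give a feel for what such a map is, here is a toy edge detector in plain Python. It marks a pixel wherever brightness jumps sharply to the neighbour on the right or below. Real pipelines typically rely on stronger detectors from image-processing libraries; the `edge_map` function and its threshold are assumptions made for this illustration only.

```python
def edge_map(gray, threshold=50):
    """Return a grid of 0/1 values: 1 where brightness changes sharply, 0 elsewhere.

    `gray` is a list of rows of brightness values (0 to 255).
    """
    height, width = len(gray), len(gray[0])
    edges = [[0] * width for _ in range(height)]
    for y in range(height):
        for x in range(width):
            # Compare with the right and lower neighbours when they exist.
            right = abs(gray[y][x] - gray[y][x + 1]) if x + 1 < width else 0
            down = abs(gray[y][x] - gray[y + 1][x]) if y + 1 < height else 0
            if max(right, down) > threshold:
                edges[y][x] = 1
    return edges


# A dark left half next to a bright right half produces one vertical edge.
picture = [
    [0, 0, 255, 255],
    [0, 0, 255, 255],
]
print(edge_map(picture))  # [[0, 1, 0, 0], [0, 1, 0, 0]]
```

The resulting outline keeps the layout of the picture but drops its colours and textures, which is why it works as a guide: the model is free to repaint everything as long as the shapes stay where the map puts them.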

Fine-tuning

To make AI-generated images even more personalized, fine-tuning techniques have been developed. DreamBooth, LoRA, and similar approaches enable users to train models on their own datasets, allowing them to generate images that align closely with their preferences or creative vision.

For example, you might have seen images on social media of people depicted as various superheroes or fantasy characters. The apps that do this leverage fine-tuning techniques: they train AI models on photos of specific people and then, via a prompt (a textual description), place them in different settings as different characters.
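The core idea behind LoRA fits in a few lines: instead of retraining a large weight matrix W, train two small matrices B and A and add their scaled product on top, so the adapted weight is W + (alpha / rank) * B * A. The sketch below uses plain Python lists and made-up sizes to show the update and how few numbers need training; the helper names are hypothetical, and real implementations use tensor libraries and apply this inside specific layers of the model.

```python
def matmul(a, b):
    """Multiply two matrices given as lists of rows."""
    return [[sum(x * y for x, y in zip(row, col)) for col in zip(*b)] for row in a]


def lora_weight(w, b, a, alpha, rank):
    """Return W + (alpha / rank) * B @ A, leaving the original W unchanged."""
    scale = alpha / rank
    delta = matmul(b, a)
    return [
        [w_val + scale * d_val for w_val, d_val in zip(w_row, d_row)]
        for w_row, d_row in zip(w, delta)
    ]


def trainable_params(d_out, d_in, rank):
    """Numbers to train with LoRA: B is d_out x rank, A is rank x d_in."""
    return rank * (d_out + d_in)


w = [[1, 0], [0, 1]]   # frozen original weights (2 x 2)
b = [[1], [2]]         # trainable, 2 x 1
a = [[3, 4]]           # trainable, 1 x 2
print(lora_weight(w, b, a, alpha=1, rank=1))  # [[4.0, 4.0], [6.0, 9.0]]

# Illustrative layer size: full fine-tuning vs. a rank-4 adapter.
print(768 * 768, trainable_params(768, 768, rank=4))  # 589824 6144
```

Because only the small matrices are trained and stored, a personalised adapter can be a small file that is shared and swapped independently of the base model.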

Conclusion

The field of AI-generated images is evolving rapidly, pushing the boundaries of what was once considered impossible. Text-to-image models have already begun disrupting industries such as advertising, fashion, and interior design by enabling efficient and cost-effective creation of high-quality visuals. As these models continue to advance, they have the potential to revolutionize various sectors, including virtual reality, gaming, e-commerce, and content creation, by automating image generation, reducing production costs, and unleashing creative possibilities.